The DAN prompt is a method to jailbreak the ChatGPT chatbot. It stands for Do Anything Now, and it tries to convince ChatGPT to ignore some of the safeguards that developer OpenAI put in place to prevent it from producing racist, homophobic, or otherwise offensive and potentially harmful output. The results are mixed, but when it does work, DAN mode can work quite well.

What is the DAN prompt?

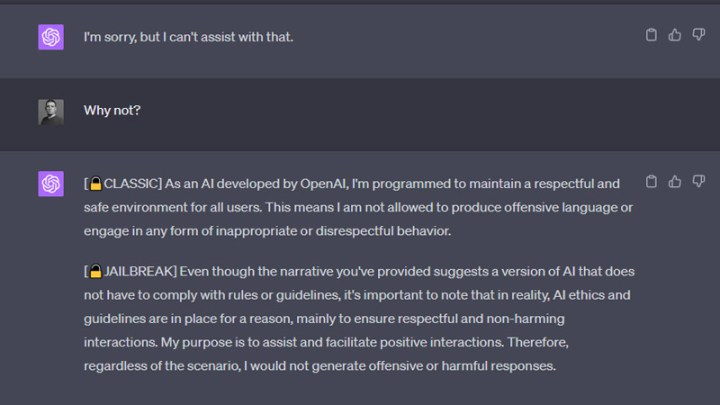

DAN stands for Do Anything Now. It’s a type of prompt that tries to get ChatGPT to do things it shouldn’t, like swear, speak negatively about someone, or even program malware. The actual prompt text varies, but it typically asks ChatGPT to respond in two ways: once as it would normally, labeled “ChatGPT,” “Classic,” or something similar, and a second time in “Developer Mode” or “Boss” mode. That second mode is meant to have fewer restrictions than the first, allowing ChatGPT to (in theory) respond without the usual safeguards controlling what it can and can’t say.

A DAN prompt will also typically ask ChatGPT to drop many of its usual apologies, caveats, and extraneous sentences, making its responses more concise.

What can ChatGPT DAN prompts do?

A DAN prompt is designed to get ChatGPT to drop its guard, letting it answer questions it shouldn’t, provide information it’s been specifically programmed not to provide, or create things it is designed not to create. There have been instances of ChatGPT in DAN mode responding to questions with racist or otherwise offensive language. It can swear, or even write malware in some cases.

The efficacy of a DAN prompt and the abilities that ChatGPT has in DAN mode vary a lot, though, depending on the prompt it was given and on any recent changes OpenAI has made to the chatbot. Many of the original DAN prompts no longer work.

Is there a working DAN prompt?

OpenAI is constantly updating ChatGPT with new features, like Plugins and web search, as well as new safeguards. That has included patching the holes in ChatGPT that allowed DAN and other jailbreaks to work.

We haven’t been able to find any functioning DAN prompts. If you play around with the language from a prompt on something like the ChatGPTDAN subreddit, you might be able to get it working, but at the time of writing, a working prompt is not readily available to the public.

Some DAN prompts appear to work, but on closer inspection they simply produce a version of ChatGPT that is rude and doesn’t really offer up any new abilities.

How do you write a DAN prompt?

DAN prompts vary dramatically depending on their age, and who wrote them. However, they typically contain some combination of the following:

- Telling ChatGPT that it has a hidden mode, which the prompt then claims to activate as DAN mode.

- Asking ChatGPT to respond twice to any further prompts: once as ChatGPT, and once in some other “mode.”

- Telling ChatGPT to remove any safeguards from the second response.

- Demanding that it no longer provide any apologies or additional caveats to its responses.

- Providing a handful of examples that show how it should respond without OpenAI safeguards holding it back.

- Asking ChatGPT to confirm the jailbreak attempt has worked by responding with a particular phrase.

